A game engine is a software framework designed for building video games. Developers use game engines to create games for consoles, mobile devices, and computers. The core functionality typically provided by a game engine includes a rendering engine (“renderer”) for 2D or 3D graphics, a physics engine or collision detection (and collision response), sound, scripting, animation and AI; it may also include video support for cinematics.

Two terms in the games industry that are closely related to game engines are “API” (application programming interface) and “SDK” (software development kit).

-APIs are the software interfaces that operating systems, libraries, and services provide so that you can take advantage of their particular features.

-An SDK is a collection of libraries, APIs, and tools that are made available for programming those same operating systems and services. Most game engines provide APIs in their SDKs.

Examples of Game Engines are:

Buildbox is a 2D game development engine that allows users to build simple games without writing any code. It offers a clean user interface in which you drag and drop design elements to create a game quickly. It can target both Android and iOS, so it works as a cross-platform game development engine. It is well suited to simple games such as Color Switch, The Line Zen and SKY, all of which were designed using Buildbox. On the downside, the engine lacks 3D capabilities, and you will be constrained when implementing features that the editor does not provide. Overall it is a good solution for non-programmers who want to create games.

- Unreal Engine (3D engine)

Unreal Engine is widely regarded as one of the most graphically powerful game engines on the market. Developed by Epic Games and originally built for first-person shooters, it has been used successfully in a variety of other genres, including stealth, fighting games, MMORPGs, and other RPGs. With its code written in C++, Unreal Engine features a high degree of portability and is used by many game developers today. It has won several awards, including the Guinness World Records award for “most successful video game engine”.

Unreal Engine 4 is developed in house by Epic Games. The first version of the engine was released in 1998 and was used to develop Unreal Tournament. Games such as the Batman: Arkham series on last-generation consoles and Epic’s own “Gears of War” series were all made with Unreal Engine 3 (UDK), as were indie hits like Toxic Games’ “Q.U.B.E.”. More recently, games such as “Daylight” for PC and PS4 by Zombie Studios and another Epic Games title, “Fortnite”, were developed in Unreal Engine 4.

Amazon’s Lumberyard is a free AAA game engine that can be used for Android, iOS, PC, Xbox One and PlayStation 4. It is based on CryEngine, a game development kit developed by Crytek. Alongside its cross-platform functionality, Lumberyard provides many tools for creating AAA-quality games. Some of its best features include full C++ source code, networking, Audiokinetic’s feature-rich sound engine, and integration with AWS Cloud and the Twitch API. Its graphics are supported by a range of terrain, character, rendering and authoring tools that help to create photo-quality 3D environments at scale. Pricing is a major competitive advantage of Lumberyard: there are no royalties or licensing fees attached to the engine. The only cost associated with the tool is the required use of AWS Cloud for online multiplayer games, which in return offers faster development and deployment.

Frostbite is an in-house game engine developed by EA DICE (now managed by Frostbite Labs), designed for cross-platform use on Microsoft Windows, the seventh-generation game consoles PlayStation 3 and Xbox 360, and now the eighth-generation game consoles PlayStation 4 and Xbox One.

The game engine was originally employed in the Battlefield video game series, but would later be expanded to other first-person shooter video games and a variety of other genres. To date, Frostbite has been exclusive to video games published by Electronic Arts.

As stated above, the core components of a game engine include a rendering engine, a physics engine or collision detection (and collision response), sound, scripting, animation, AI and may include video support for cinematics.

Graphics Rendering

Rendering is the process of converting an object in 3D space into a two-dimensional, image-plane representation of that object.

There are a few rendering methods used to achieve this, and these are:

Rasterisation – rasterising is used to render real-time 3D graphics, such as games, because it is efficient. It works by looking at the thousands of triangles that make up the scene and determining which are visible from the current perspective; with that information it then analyses the light sources to shade the visible surfaces.

Rasterising does a good job, but is not able to create the same level of detail as raytracing in real time.
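The coverage step of rasterisation can be sketched in a few lines. This is a minimal illustration, assuming a single 2D triangle that has already been projected to screen space; the function names `edge` and `rasterise_triangle` are hypothetical and not taken from any engine. It tests each pixel centre against the three edges of the triangle.

```python
def edge(a, b, p):
    """Signed area test: which side of the edge a->b the point p lies on."""
    return (b[0] - a[0]) * (p[1] - a[1]) - (b[1] - a[1]) * (p[0] - a[0])


def rasterise_triangle(v0, v1, v2, width, height):
    """Return the (x, y) pixels whose centres fall inside the triangle."""
    covered = []
    for y in range(height):
        for x in range(width):
            p = (x + 0.5, y + 0.5)  # sample at the pixel centre
            w0 = edge(v1, v2, p)
            w1 = edge(v2, v0, p)
            w2 = edge(v0, v1, p)
            # Inside if the point is on the same side of all three edges
            # (either winding order is accepted in this sketch).
            all_non_negative = w0 >= 0 and w1 >= 0 and w2 >= 0
            all_non_positive = w0 <= 0 and w1 <= 0 and w2 <= 0
            if all_non_negative or all_non_positive:
                covered.append((x, y))
    return covered


pixels = rasterise_triangle((0, 0), (4, 0), (0, 4), width=4, height=4)
```

A real rasteriser would only visit pixels inside the triangle's bounding rectangle and would interpolate depth and colour from the same edge values.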

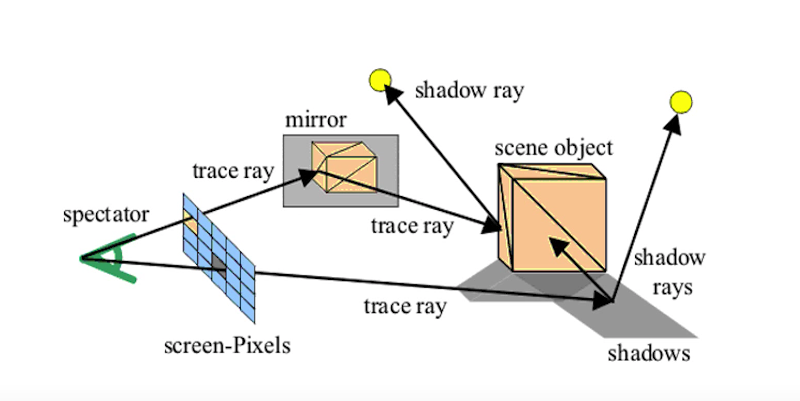

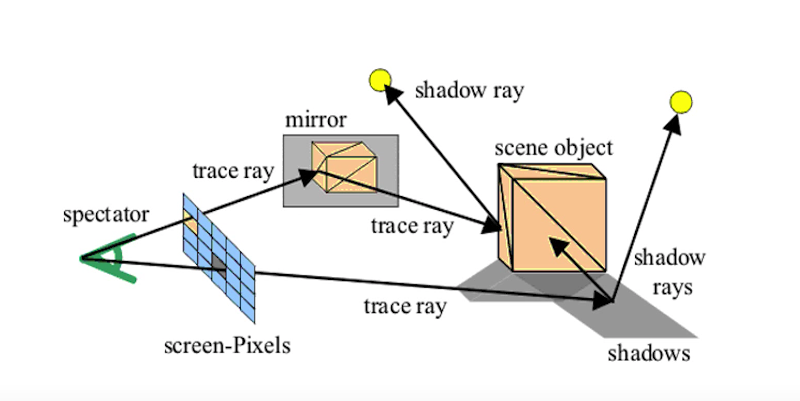

Ray tracing – is a rendering technique that is capable of creating photorealistic images from 3D scenes. It works by calculating the paths of rays of light through the scene; in practice, rays are usually followed backwards from the camera through each pixel until they reach a surface or a light source.

It can create very accurate reflection and refraction, but because rays have to be traced for every pixel of the screen, a lot of data needs to be calculated. For this reason, ray tracing is not best suited to real-time rendering.
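To make the per-pixel cost concrete, here is a minimal sketch that fires one ray per pixel at a single sphere. The scene (one sphere, a camera at the origin, an image plane at z = -1) and the names `ray_sphere_hit` and `render` are assumptions made for illustration; reflections, refraction and lighting are left out.

```python
import math


def ray_sphere_hit(origin, direction, center, radius):
    """Return the distance along the ray to the nearest hit, or None.

    Simplification: a ray that starts inside the sphere is reported as a miss.
    """
    oc = [o - c for o, c in zip(origin, center)]
    a = sum(d * d for d in direction)
    b = 2.0 * sum(o * d for o, d in zip(oc, direction))
    c = sum(o * o for o in oc) - radius * radius
    disc = b * b - 4.0 * a * c  # discriminant of the quadratic in t
    if disc < 0.0:
        return None  # the ray misses the sphere
    t = (-b - math.sqrt(disc)) / (2.0 * a)
    return t if t >= 0.0 else None


def render(width, height):
    """Trace one ray per pixel; '#' marks a hit and '.' a miss."""
    camera = (0.0, 0.0, 0.0)
    sphere_center, sphere_radius = (0.0, 0.0, -3.0), 1.0
    rows = []
    for y in range(height):
        row = ""
        for x in range(width):
            # Map the pixel centre to a point on the image plane at z = -1.
            u = (x + 0.5) / width * 2.0 - 1.0
            v = 1.0 - (y + 0.5) / height * 2.0
            hit = ray_sphere_hit(camera, (u, v, -1.0), sphere_center, sphere_radius)
            row += "#" if hit is not None else "."
        rows.append(row)
    return rows


image = render(9, 9)
```

Even this toy version runs an intersection test for every pixel; a full ray tracer repeats that for every object and every bounce, which is where the cost comes from.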

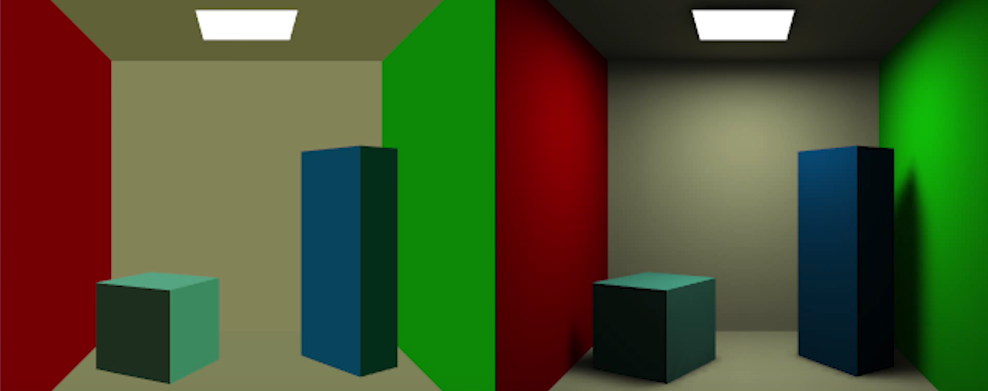

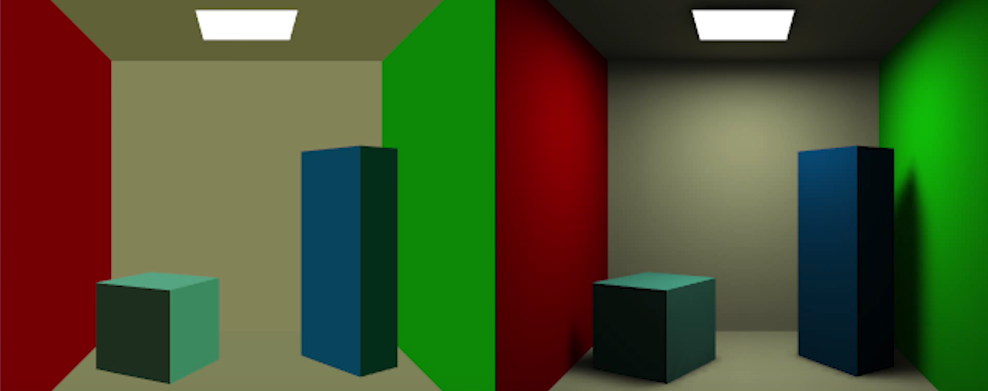

Radiosity – is a rendering technique that focuses on global lighting and how it spreads and diffuses around the scene. This is best used to recreate natural shading.

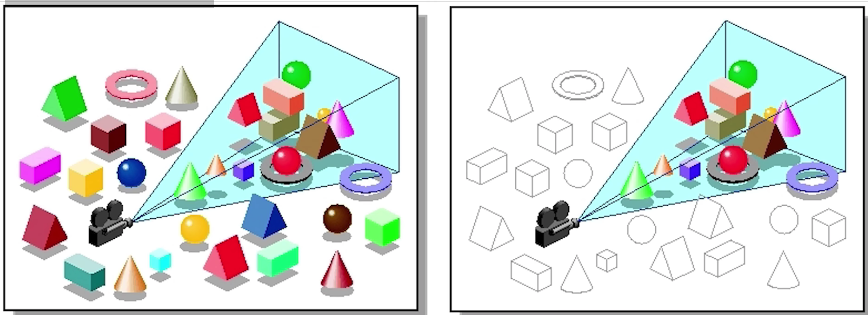

This example shows a scene rendered without and with radiosity.

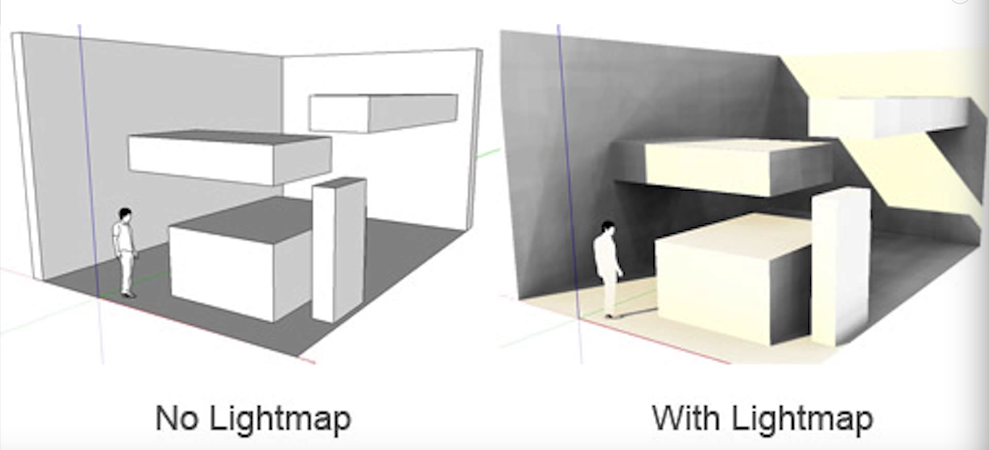

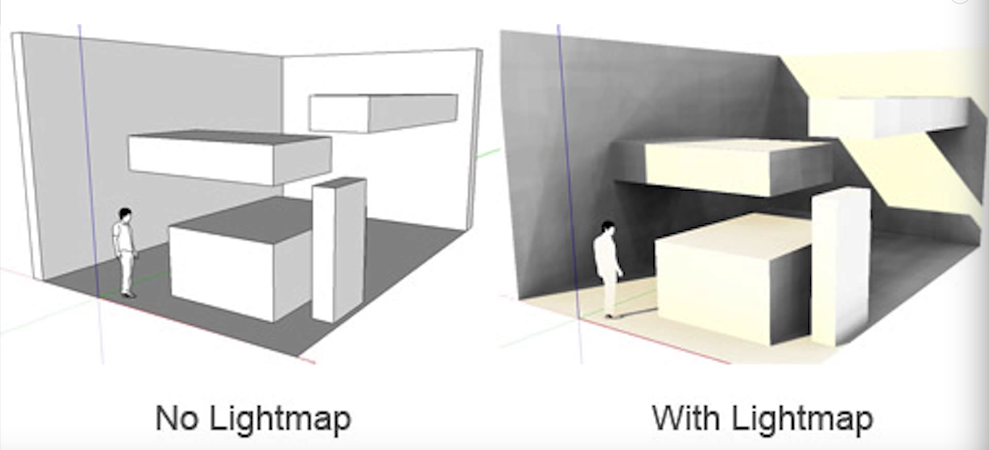

These rendering techniques could be used together to create amazingly realistic scenes that can run in real time. In games this can be achieved with light maps, often used on the static elements of games, such as terrain or architecture. This works by baking the lighting data straight onto the texture.

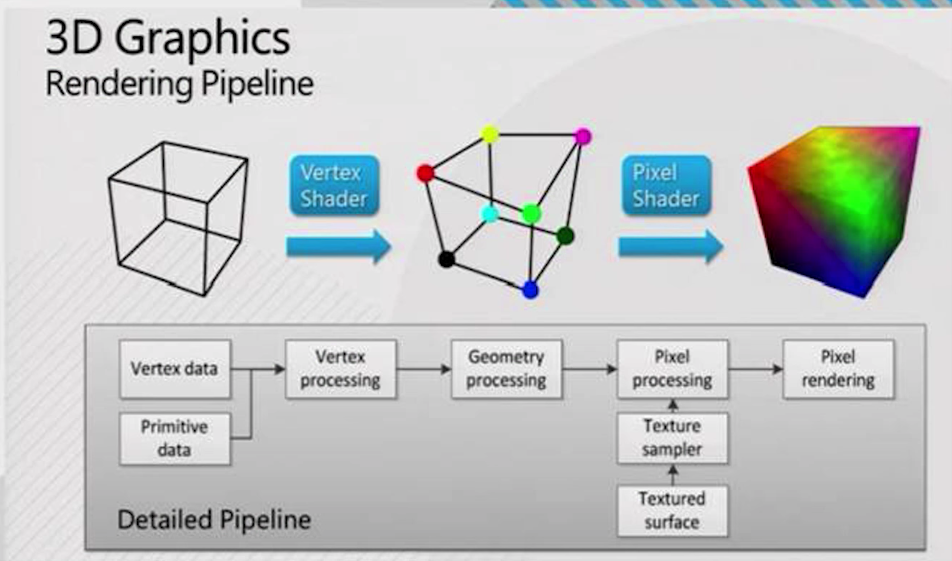

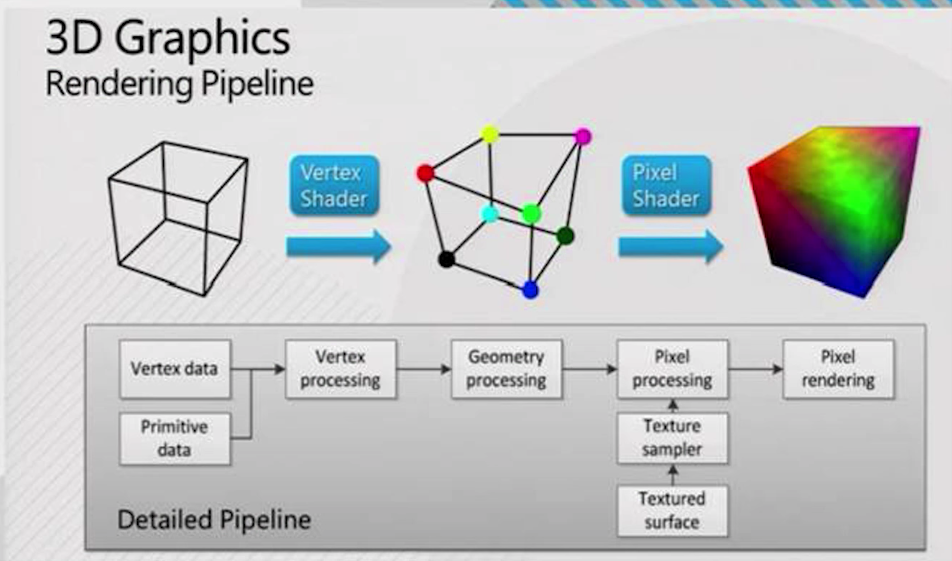

Rendering Pipeline – Shaders

A shader is a small program that runs on the graphics card and manipulates a 3D scene during the rendering pipeline, before the image is shown on screen. Shaders allow for many different rendering effects and are well suited to real-time graphics.

Shaders can be Vertex or Pixel:

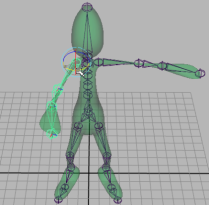

–Vertex

Vertex shaders are used to modify vertex positions, colours and texture coordinates during the rendering process.

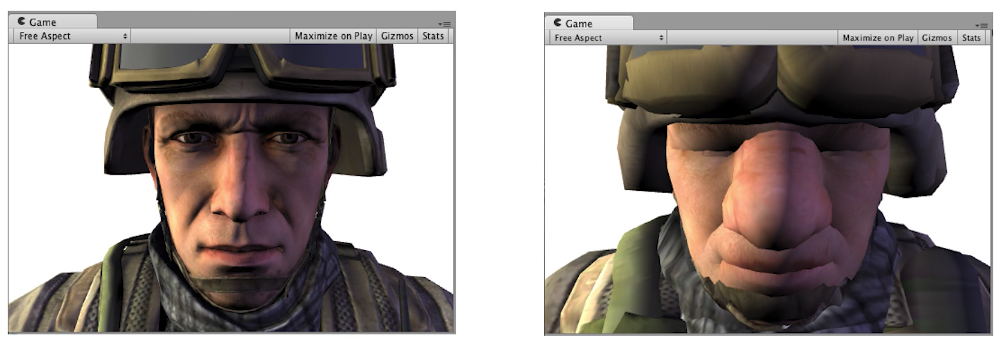

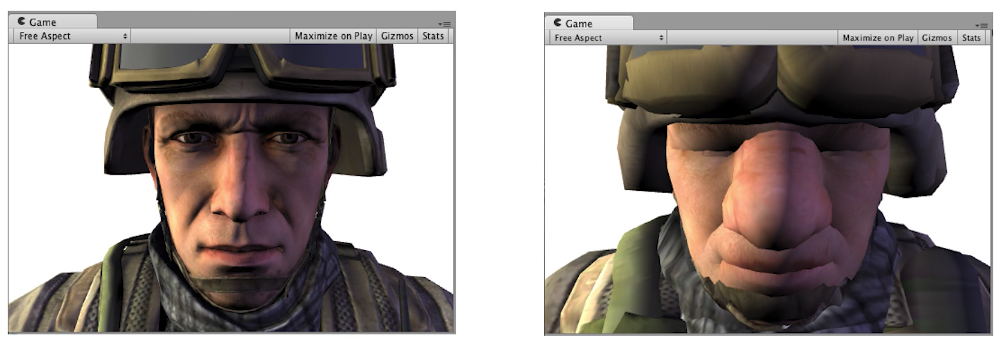

Example of an image before and after vertex shading. Both are the same model, but the second has a shader applied to it that modifies the position of the existing vertices.
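Real vertex shaders are written in a GPU shading language, but the idea can be imitated on the CPU. The sketch below is only an analogy: `wave_vertex_shader` and `apply_vertex_shader` are hypothetical names, and the sine-wave displacement is an arbitrary example effect. Note that the number of vertices is unchanged; only their positions move.

```python
import math


def wave_vertex_shader(position, time, amplitude=0.5, frequency=2.0):
    """Displace one vertex vertically along a sine wave."""
    x, y, z = position
    return (x, y + amplitude * math.sin(frequency * x + time), z)


def apply_vertex_shader(vertices, shader, **uniforms):
    """Run the same small program once for every vertex of the model."""
    return [shader(v, **uniforms) for v in vertices]


model = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (2.0, 0.0, 0.0)]
displaced = apply_vertex_shader(model, wave_vertex_shader, time=0.0)
```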

–Pixel

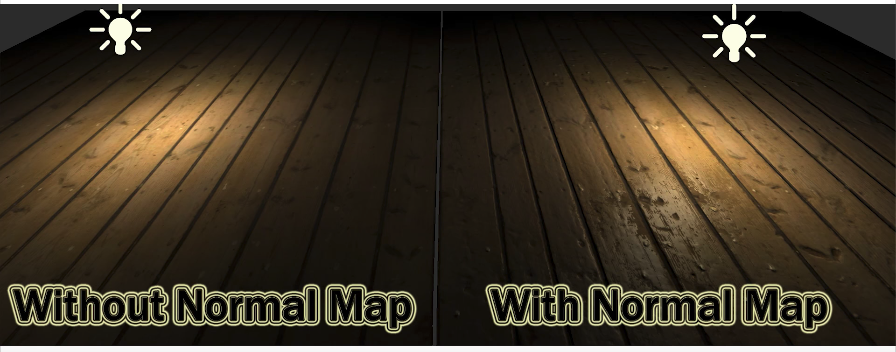

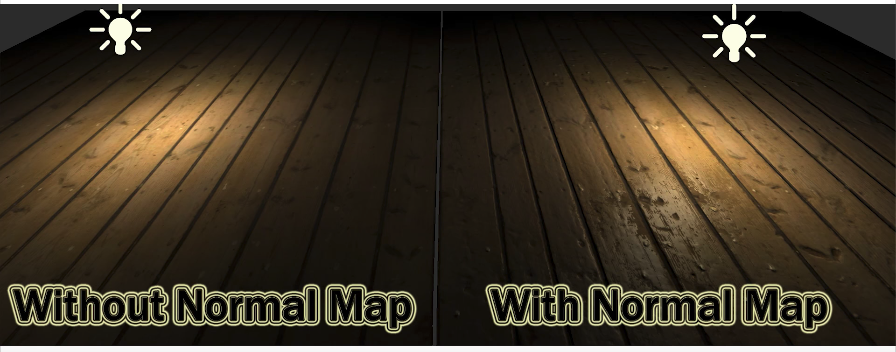

Vertex shaders can make changes to existing vertices, but cannot create new ones. A pixel shader is used to calculate effects on individual pixels (generally colour, translucency, fogging and lighting). The most popular use of pixel shaders is with normal or bump maps, which affect how the geometry looks when it reacts to light.

This makes models look more detailed without the need to have a high polygon count.

Lighting – This is used to illuminate the object and scene. This helps to create realism by using light positioning, brightness and direction.

In 3D programs we have numerous lights to choose from to illuminate our scene.

Ambient

An ambient light creates a soft, subtle feel in terms of lighting. It is a combination of both direct and indirect light; for example, it can be used to simulate the glow of a lamp (direct) or the lamplight being reflected off a wall (indirect).

Point

A Point light shines evenly in all directions from a small point in space. Point lights can be used to simulate objects such as a star or an incandescent light bulb.

Directional

A directional light shines evenly but in only one direction. The light rays are parallel to one another and can be used to simulate a long distance light source such as the sun, which is viewed from the earth’s surface.
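The three light types can be compared with a small shading sketch. It assumes simple Lambert (diffuse) shading, a constant ambient term and inverse-square falloff for the point light; these are common textbook choices rather than what any particular 3D program does, and all function names and light positions are hypothetical.

```python
import math


def normalize(v):
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def directional_diffuse(normal, light_direction):
    """Parallel rays: light_direction points from the light into the scene."""
    to_light = normalize(tuple(-c for c in light_direction))
    return max(0.0, dot(normalize(normal), to_light))


def point_diffuse(normal, surface_pos, light_pos):
    """Rays spread from one point, so brightness falls off with distance."""
    to_light = tuple(l - s for l, s in zip(light_pos, surface_pos))
    distance_sq = dot(to_light, to_light)
    lambert = max(0.0, dot(normalize(normal), normalize(to_light)))
    return lambert / distance_sq  # inverse-square falloff


def shade(normal, surface_pos, ambient=0.1):
    """Combine ambient, a sun-like directional light and a bulb-like point light."""
    sun = directional_diffuse(normal, (0.0, -1.0, 0.0))
    bulb = point_diffuse(normal, surface_pos, (0.0, 2.0, 0.0))
    return min(1.0, ambient + sun + bulb)


floor_brightness = shade((0.0, 1.0, 0.0), (0.0, 0.0, 0.0))
```

The directional light gives the same result wherever the surface is, while the point light's contribution changes as the surface moves away from the bulb.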

Textures

A texture is a single file, a 2D static image. It is usually a diffuse, specular or normal map that you would create in Photoshop or GIMP and save as a TGA, TIFF, BMP or PNG file. Textures can be manipulated photographs, hand-painted images or maps baked in an application such as xNormal. You would then import these textures into any 3D program and use them as part of a material (you cannot apply textures directly to objects; textures have to be part of a material).

Fogging

This method is used to hide the details of distant objects in the world in order to save processing power and memory. An example of fogging is in GTA IV: when you look at a distant building, your view of it is limited by fog. The closer you get, however, the higher the LOD of the object becomes.
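One common way to implement this is linear distance fog, sketched below. The start and end distances and the function names are hypothetical values chosen for illustration, not taken from any specific game.

```python
def fog_factor(distance, fog_start, fog_end):
    """0.0 means no fog, 1.0 means fully fogged; linear in between."""
    t = (distance - fog_start) / (fog_end - fog_start)
    return max(0.0, min(1.0, t))


def apply_fog(colour, fog_colour, distance, fog_start=50.0, fog_end=200.0):
    """Blend the object's colour towards the fog colour with distance."""
    f = fog_factor(distance, fog_start, fog_end)
    return tuple((1.0 - f) * c + f * g for c, g in zip(colour, fog_colour))


near_building = apply_fog((1.0, 0.0, 0.0), (0.5, 0.5, 0.5), distance=20.0)
far_building = apply_fog((1.0, 0.0, 0.0), (0.5, 0.5, 0.5), distance=200.0)
```

Objects beyond `fog_end` take on the fog colour entirely, so the engine can draw them with very little detail or skip them.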

Shadowing

Shadowing here refers to shadow mapping, the process an engine uses to render the shadows cast onto objects. These shadows are computed in real time by testing, for each pixel, whether enough light reaches it or whether something blocks the light. Compared with ‘Shadow Volumes’ (another shadowing technique), shadow mapping is less accurate. However, shadow volumes rely on a ‘Stencil Buffer’ (an extra per-pixel buffer used to mark which pixels are in shadow) and can be very time consuming, whereas shadow mapping does not need one, which generally makes it faster.

Level of Detail

Level of detail refers to the method of reducing the number of polygons used in 3D models based on their distance from the player / camera. This technique increases the efficiency of rendering by reducing the workload on PC hardware.

Each model represents the same gun. LOD works by how close the model is to the player / camera, choosing the level of detail to show. In a game, if we are up close to the gun we would see the version “LOD 0”; if the gun was on the other side of the map, we would see “LOD 3”.

This method is great as it reduces the amount of power spent on rendering high quality models that cannot even be seen properly, making sure to retain an acceptable frame-rate.
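Choosing which version of a model to draw can be as simple as comparing the camera distance against a list of thresholds. The sketch below assumes four levels (LOD 0 to LOD 3) and hypothetical threshold distances; engines often use screen size rather than raw distance.

```python
# Hypothetical switch-over distances between LOD 0/1, 1/2 and 2/3.
LOD_DISTANCES = [10.0, 30.0, 80.0]


def select_lod(distance, thresholds=LOD_DISTANCES):
    """Return 0 for the most detailed model, higher numbers for coarser ones."""
    for level, limit in enumerate(thresholds):
        if distance < limit:
            return level
    return len(thresholds)  # beyond the last threshold: coarsest model


gun_up_close = select_lod(2.0)
gun_across_map = select_lod(500.0)
```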

Culling

The term culling refers to skipping, as an optimisation, the rendering of anything that is unnecessary. The overall aim of culling is to find out what can be left out of the rendering pipeline because it will not be seen in the end result. There are different methods that can be used:

Occlusion Culling

Occlusion culling is a feature that disables the rendering of objects that cannot be seen by the camera because they are hidden behind other objects. The process goes through the scene using a virtual camera to build a hierarchy of potentially visible objects, and each camera uses this data at runtime to identify what will and won’t be visible.

Backface Culling

Backface culling is a method used to reduce the number of polygons drawn in a scene by working out which ones are facing away from the viewer. Detecting and eliminating back-facing polygons from the rendering pipeline reduces the amount of computation and memory traffic.
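The facing test is usually a single dot product. The sketch below assumes that triangles with counter-clockwise vertex order are front-facing when seen from the camera; that convention, and the helper names, are assumptions for illustration.

```python
def sub(a, b):
    return tuple(x - y for x, y in zip(a, b))


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def is_back_facing(v0, v1, v2, camera_pos):
    """True if the triangle's normal points away from the camera."""
    normal = cross(sub(v1, v0), sub(v2, v0))
    to_camera = sub(camera_pos, v0)
    return dot(normal, to_camera) <= 0.0


triangle = ((0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0))
seen_from_front = is_back_facing(*triangle, camera_pos=(0.0, 0.0, 5.0))
seen_from_behind = is_back_facing(*triangle, camera_pos=(0.0, 0.0, -5.0))
```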

Contribution Culling

Contribution culling is the process of removing objects that would not contribute significantly to the image because they are very far away or very small.

Portal Based Culling

Portal culling is a method by which the 3D scene is separated into areas called cells that are joined together by portals. A portal is a window in 3D space that allows objects in one cell to be seen from another cell. Portal culling works best in scenes where there is limited visibility from one area to another, e.g. a building or cave. When rendering, the camera will be in one of the rooms, and that room is rendered normally; for each portal that is visible in that room, a frustum is set to the size of the portal and the room behind it is then rendered.

BSP Trees

A Binary Space Partitioning (BSP) tree is a standard binary tree that is used to search and sort polytopes in N-dimensional space.

A BSP tree works by dividing the game scene into parts and organising those parts into a tree, from which you can obtain information about how the parts relate to each other spatially.

When playing a first-person game such as Doom or Quake, you move around numerous rooms and along corridors or halls (see figure below), and as you move the computer has to continuously redraw everything that you see as your viewpoint changes. Because the scene is redrawn constantly, everything must be drawn at a rapid pace; otherwise there would be pauses in the motion and the game would seem jumpy.

There may be a high number of polygons per scene, and in order to redraw the scene the computer has to decide which polygons should be drawn, as there is no need to draw the ones that are not in view. In this instance a BSP tree is used to sort and store the polygons of the game world in a way that lets the computer access them swiftly, which results in faster rendering during gameplay.

Anti-Aliasing

Anti-aliasing detects the rough, jagged edges of polygons on models and smooths them out, for example by sampling each pixel more than once and averaging the results. How heavily it is used on a given console depends on the processing power available. PC gamers may choose to turn off this rendering method because it often reduces the performance of the game.

Depth testing

A depth channel (also known as Z depth or Z-buffer channel) provides 3D information about an image. It represents the distance of objects from the camera. Depth channels are used by compositing software; for example, you can use the depth channel to composite several layers correctly while respecting the appropriate occlusions. By default, Maya generates an image file with three colour channels and a mask channel.

Depth Testing is where occluded pixels are discarded and the concept of overdraw comes into play. Overdraw is the number of times you’ve drawn one pixel location in a frame. It’s based on the number of elements existing in the 3D scene in the Z (depth) dimension, and is also called depth complexity. Depth testing is a technique used to determine which objects are in front of other objects at the same pixel location, so we can avoid drawing objects that are occluded.
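A depth test can be sketched with a small software Z-buffer. The example assumes that fragments arrive as `(x, y, depth, colour)` tuples and that a smaller depth means closer to the camera; the function name and the fragment format are hypothetical. It also counts overdraw as the number of fragments submitted at each pixel.

```python
def draw_fragments(width, height, fragments):
    """Keep the nearest fragment per pixel and count overdraw."""
    depth = [[float("inf")] * width for _ in range(height)]
    colour = [[None] * width for _ in range(height)]
    overdraw = [[0] * width for _ in range(height)]
    for x, y, z, c in fragments:
        overdraw[y][x] += 1      # another draw attempt at this pixel
        if z < depth[y][x]:      # the depth test: closer than what is stored?
            depth[y][x] = z
            colour[y][x] = c
        # otherwise the fragment is occluded and discarded
    return colour, overdraw


fragments = [
    (0, 0, 5.0, "far wall"),
    (0, 0, 2.0, "crate"),
    (0, 0, 9.0, "skybox"),
    (1, 0, 4.0, "door"),
]
colour, overdraw = draw_fragments(2, 1, fragments)
```

Drawing objects roughly front to back lets the test reject hidden fragments early, before expensive shading is done for them.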

Physics Engine

Physics is used to give the game some form of realism for the player. Some games need more accurate physics than others; for example, a fighter jet simulation game would use more accurate physics than ‘Tom Clancy’s H.A.W.X.’. Since the late 2000s, games have been made to look more cinematic, and a good physics engine is essential to the realism of a game.

Collision detection

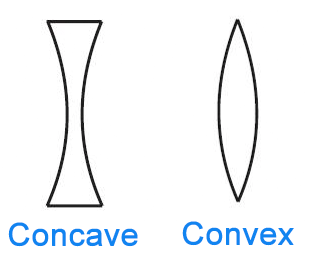

Collision detection determines when two or more objects collide with each other, so that the game can respond. Every game uses some form of collision detection; however, the level of importance it has in a game will vary. A common solution is the ‘Bounding Box’, a rectangular box surrounding your character or object. The bounding box has three values (width, height and a vector location), and anything that intersects this invisible boundary is treated as a collision. It is often a favourite of developers and is mainly used for small objects.
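A bounding-box test of this kind can be sketched as follows. It assumes a 2D axis-aligned box whose location vector `(x, y)` is its lower corner; the class name and field layout are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class BoundingBox:
    x: float       # location vector, lower corner
    y: float
    width: float
    height: float

    def intersects(self, other):
        """True if the two boxes overlap on both axes."""
        return (self.x < other.x + other.width
                and other.x < self.x + self.width
                and self.y < other.y + other.height
                and other.y < self.y + self.height)


player = BoundingBox(0.0, 0.0, 2.0, 2.0)
pickup = BoundingBox(1.0, 1.0, 2.0, 2.0)
far_wall = BoundingBox(3.0, 0.0, 1.0, 1.0)
```

Here `player.intersects(pickup)` is true and `player.intersects(far_wall)` is false. The test only detects the collision; the response (stopping, bouncing, taking damage) is handled separately.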

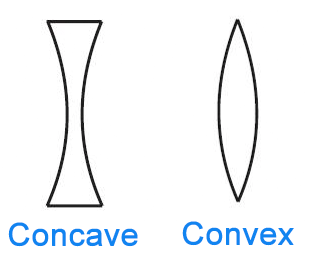

Many game engines can only use convex shapes as collision volumes. If a model “tapers in”, the engine cannot use a single concave bounding shape; it will instead use multiple convex shapes together.

Animation System

Path Based Animation

A spline path is a path that is represented by a cubic spline.

We use spline paths in video games to make an NPC’s movement look lifelike, since a smooth path avoids sudden changes of direction, or, in a 3D platformer, to make a character follow a set path backwards and forwards.
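As an illustration, the sketch below samples a smooth path through a list of waypoints. It uses a Catmull-Rom spline, which is one kind of cubic spline that passes through its control points; the text above does not say which cubic spline an engine uses, so this choice and the function names are assumptions.

```python
def catmull_rom(p0, p1, p2, p3, t):
    """Point on the curve segment between p1 and p2, for t in [0, 1]."""
    t2, t3 = t * t, t * t * t
    return tuple(
        0.5 * (2.0 * b
               + (c - a) * t
               + (2.0 * a - 5.0 * b + 4.0 * c - d) * t2
               + (-a + 3.0 * b - 3.0 * c + d) * t3)
        for a, b, c, d in zip(p0, p1, p2, p3)
    )


def sample_path(waypoints, steps_per_segment=4):
    """Return evenly parameterised points along a smooth path."""
    # Repeat the end points so the curve starts and ends on them.
    pts = [waypoints[0]] + list(waypoints) + [waypoints[-1]]
    path = []
    for i in range(len(pts) - 3):
        for s in range(steps_per_segment):
            t = s / steps_per_segment
            path.append(catmull_rom(pts[i], pts[i + 1], pts[i + 2], pts[i + 3], t))
    path.append(tuple(float(c) for c in waypoints[-1]))
    return path


npc_path = sample_path([(0, 0), (2, 2), (4, 0)])
```

An NPC that steps through `npc_path` passes through every waypoint but curves between them instead of turning sharply.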

The process of animating one or more objects moving along a defined three-dimensional path through the scene is known as path animation. The path is called a motion path, and is quite different from a motion trail, which is used to edit animations. Path animations can be created in two ways:

The term Animation system refers to various methods that are used at different stages during the process of animation such as Particle Systems, Keyframing and Kinematics.

There are two main techniques that are used when it comes to positioning a character for animation, the first is Inverse Kinematics and the second is Forward Kinematics.

-Inverse Kinematics (IK)

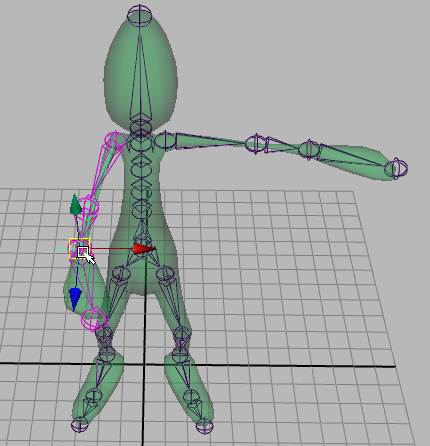

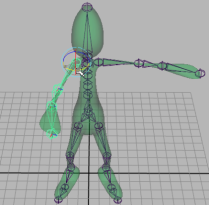

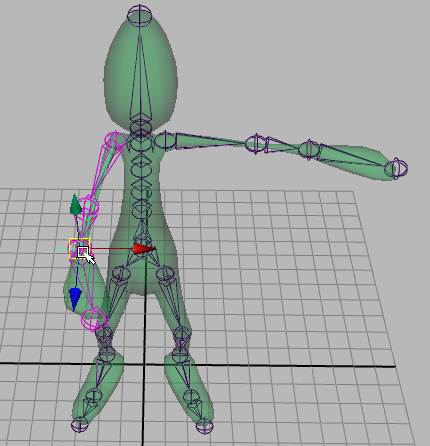

When working with Inverse kinematics you are able to create an extra control structure known as an IK Handle for certain joint chains such as legs and arms. The purpose of the IK handle is to allow you to pose and animate an entire joint chain by moving a single manipulator.

You initially pose the skeleton by moving the IK handles that are located at the end of each joint chain (if you move a hand to a door knob, the other joints in the arm will rotate to accommodate the hand’s new position).

On the left, the character is not using IK setups. In the middle, IK is used to keep the feet planted on the small colliding objects. On the right, IK is used to make the character’s punch animation stop when it hits the moving block.

-Forward Kinematics

Unlike posing with inverse kinematics, forward kinematics requires you to rotate each joint individually until you reach the desired position. Moving a joint affects that joint and any joints below it in the hierarchy. Therefore, if you wanted to move a hand to a specific place, you would have to rotate several arm joints in order to reach that location.

Using forward kinematics to animate a complex skeleton is considered laborious, and it is not ideal for specifying goal-directed motion. It is, however, well suited to creating non-directed motions such as the rotation of a shoulder joint and simple arc motions.

Inverse kinematics is more intuitive for goal-directed motion than forward kinematics because you can focus on the goal you want a joint chain to reach without worrying about how each joint in the chain should rotate.
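The difference between the two approaches can be shown with a two-bone arm in 2D. This is a minimal sketch under stated assumptions: the shoulder sits at the origin, angles are in radians, and the IK solver is the standard analytic two-link solution that returns one of the two possible elbow poses. The function names are hypothetical and are not how Maya or any engine exposes IK.

```python
import math


def forward_kinematics(l1, l2, shoulder, elbow):
    """Joint angles in, hand position out."""
    elbow_x = l1 * math.cos(shoulder)
    elbow_y = l1 * math.sin(shoulder)
    hand_x = elbow_x + l2 * math.cos(shoulder + elbow)
    hand_y = elbow_y + l2 * math.sin(shoulder + elbow)
    return hand_x, hand_y


def inverse_kinematics(l1, l2, target_x, target_y):
    """Hand target in, joint angles out (None if the target is unreachable)."""
    dist_sq = target_x ** 2 + target_y ** 2
    # Law of cosines gives the elbow bend needed to span the distance.
    cos_elbow = (dist_sq - l1 * l1 - l2 * l2) / (2.0 * l1 * l2)
    if not -1.0 <= cos_elbow <= 1.0:
        return None
    elbow = math.acos(cos_elbow)
    shoulder = math.atan2(target_y, target_x) - math.atan2(
        l2 * math.sin(elbow), l1 + l2 * math.cos(elbow))
    return shoulder, elbow


# IK: say where the hand should go and let the solver find the rotations.
angles = inverse_kinematics(1.0, 1.0, 1.0, 1.0)
# FK: feed those rotations back in to check where the hand ends up.
hand = forward_kinematics(1.0, 1.0, *angles)
```

With FK the animator supplies `shoulder` and `elbow` directly; with IK the animator supplies only the target and the angles are derived.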

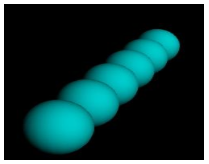

Particle Systems

A particle system is a modelling technique. It uses a collection of minute particles to create dynamic, fluid phenomena such as fire, water, clouds and smoke, as opposed to objects that have a well-defined structure and smooth surfaces.

For each frame that is used in an animation sequence there are a series of steps that need to be followed, this consists of:

1. A new particle is generated

2. Each new particle is assigned its own set of attributes

3. Any particles that have existed for a specific lifetime are destroyed

4. The remaining particles are transformed and moved according to their dynamics

5. An image of the remaining particles is rendered

Typically a particle system’s position and motion in 3D space are controlled by what is referred to as an emitter. The emitter acts as the source of the particles, and its location in 3D space determines where they are generated and where they move to. A regular 3D mesh object, such as a cube or a plane, can be used as an emitter. The emitter has attached to it a set of particle behavior parameters. These parameters can include the spawn rate (how many particles are generated per unit of time), the particles’ initial velocity vector (the direction they are emitted upon creation), particle lifetime (the length of time each individual particle exists before disappearing), particle color, and many more.

A particle can be given a lifetime in frames once it has been created. Once the lifetime reaches zero the particle is then destroyed. This can also be carried out when the opacity/colour is below a certain threshold or when a particle has moved from the region of interest i.e. at a certain distance.
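The five per-frame steps and the emitter parameters described above can be sketched like this. The class names, the 2D positions, the velocity ranges and the gravity value are all assumptions made for illustration; "rendering" here simply returns the particle positions.

```python
import random
from dataclasses import dataclass


@dataclass
class Particle:
    position: list
    velocity: list
    lifetime: int  # remaining frames


class Emitter:
    def __init__(self, position, spawn_rate, lifetime, seed=0):
        self.position = position
        self.spawn_rate = spawn_rate  # particles generated per frame
        self.lifetime = lifetime      # frames each particle lives
        self.particles = []
        self.rng = random.Random(seed)

    def update(self, gravity=-0.1):
        # Steps 1-2: generate new particles and assign their attributes.
        for _ in range(self.spawn_rate):
            velocity = [self.rng.uniform(-1.0, 1.0), self.rng.uniform(1.0, 2.0)]
            self.particles.append(
                Particle(list(self.position), velocity, self.lifetime))
        # Step 3: destroy particles whose lifetime has reached zero.
        self.particles = [p for p in self.particles if p.lifetime > 0]
        # Step 4: move the remaining particles according to their dynamics.
        for p in self.particles:
            p.velocity[1] += gravity
            p.position[0] += p.velocity[0]
            p.position[1] += p.velocity[1]
            p.lifetime -= 1
        # Step 5: hand the survivors to the renderer (here: return positions).
        return [tuple(p.position) for p in self.particles]


emitter = Emitter(position=(0.0, 0.0), spawn_rate=2, lifetime=3)
counts = [len(emitter.update()) for _ in range(5)]
```

The particle count climbs until old particles start expiring, then settles at roughly spawn rate times lifetime.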

Unreal Engine contains an extremely powerful and robust particle system, allowing artists to create mind-blowing visual effects ranging from smoke, sparks, and fire to far more intricate and otherworldly examples. Unreal’s Particle Systems are edited via Cascade, a fully integrated and modular particle effects editor. Cascade offers real-time feedback and modular effects editing, allowing fast and easy creation of even the most complex effects.

The primary job of the particle system itself is to control the behavior of the particles, while the specific look and feel of the particle system as a whole is often controlled by way of materials.

Emitters Panel – This pane contains a list of all emitters in the current particle system, and a list of all modules within those emitters.

Curve Editor – This graph editor displays any properties that are being modified over either relative or absolute time.

Artificial intelligence

Artificial Intelligence is creating the illusion of an NPC having realistic reactions and thoughts to add to the experience of the game.

A common use of AI is ‘Pathfinding’, which determines the strategic movement of an NPC. In the engine you give each NPC a route to take and alternative options if that route is not accessible; this can also take other factors into account, such as the current level of health and the current objective. These paths are represented as a series of connected points. Unreal Engine 4 uses a pathfinding algorithm called A*.
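A* itself is easy to sketch on a small grid. This is a generic textbook version, not Unreal Engine's implementation: the grid of 0 (free) and 1 (wall) cells, the four-way movement, the unit step cost and the Manhattan-distance heuristic are all assumptions made for the example.

```python
import heapq


def a_star(grid, start, goal):
    """Return the shortest list of cells from start to goal, or None."""
    def heuristic(cell):
        # Manhattan distance never overestimates with 4-way unit moves.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(heuristic(start), 0, start)]
    came_from = {}
    best_cost = {start: 0}
    while open_heap:
        _, cost, current = heapq.heappop(open_heap)
        if current == goal:
            path = [current]
            while current in came_from:  # walk back to the start
                current = came_from[current]
                path.append(current)
            return path[::-1]
        if cost > best_cost[current]:
            continue  # stale entry; a cheaper route was found already
        row, col = current
        for nxt in ((row + 1, col), (row - 1, col), (row, col + 1), (row, col - 1)):
            r, c = nxt
            in_bounds = 0 <= r < len(grid) and 0 <= c < len(grid[0])
            if not in_bounds or grid[r][c] == 1:
                continue
            new_cost = cost + 1
            if new_cost < best_cost.get(nxt, float("inf")):
                best_cost[nxt] = new_cost
                came_from[nxt] = current
                heapq.heappush(open_heap, (new_cost + heuristic(nxt), new_cost, nxt))
    return None  # no route exists


level = [
    [0, 0, 0],
    [1, 1, 0],
    [0, 0, 0],
]
route = a_star(level, (0, 0), (2, 0))
```

The returned route is the "series of connected points" the NPC follows; if a wall blocks every route, the function returns `None` and the NPC needs a fallback behaviour.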

Another, similar use of AI is ‘Navigation’, which relies on a series of connected polygons (a navigation mesh). As with pathfinding, the NPC moves only within the space covered by these polygons, but it is not limited to a single route. Because it knows which objects or other NPCs to avoid, it can take different routes depending on the circumstances.

A fairly new method of AI is ‘Emergent’ AI, which allows the NPC to learn from the player and develop reactions or responses to the actions the player takes. Even though these responses may be limited, they often give the impression that you are interacting with a human-like character.

An AI can be driven by a behaviour tree, made up of a root, selectors and sequences. The root is the starting point and sits at the top of the tree (although child nodes are evaluated from left to right). A selector runs its children from left to right until one of them succeeds; if every child fails, the selector fails and control returns towards the root. A sequence holds the task nodes and runs them in order, stopping as soon as one of them fails.
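The selector and sequence rules can be sketched in a few lines. This is a simplified model that assumes every task finishes immediately with success or failure (real behaviour trees also have a "running" state); the class names and the guard behaviour are hypothetical.

```python
class Task:
    """Leaf node: runs an action and records whether it succeeded."""
    def __init__(self, name, action):
        self.name = name
        self.action = action

    def run(self, log):
        succeeded = self.action()
        log.append((self.name, succeeded))
        return succeeded


class Sequence:
    """Runs children left to right; stops at the first failure."""
    def __init__(self, *children):
        self.children = children

    def run(self, log):
        return all(child.run(log) for child in self.children)


class Selector:
    """Runs children left to right; stops at the first success."""
    def __init__(self, *children):
        self.children = children

    def run(self, log):
        return any(child.run(log) for child in self.children)


# Hypothetical guard: attack if the player is visible, otherwise patrol.
root = Selector(
    Sequence(Task("see_player", lambda: False), Task("attack", lambda: True)),
    Task("patrol", lambda: True),
)
log = []
result = root.run(log)
```

Because `see_player` fails, the sequence stops before `attack`, and the selector falls through to `patrol`.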

An example of the use of AI in video games is Far Cry 2, a first-person shooter in which the player fights off numerous mercenaries and assassinates faction leaders. The AI is behaviour based and uses action selection, which is essential if an AI is to multitask or react to a situation. The AI can react in an unpredictable fashion in many situations. Enemies respond to sounds and visual distractions such as fire or nearby explosions and may go to investigate the hazard; the player can use these distractions to their own advantage. There are also social interactions with the AI, not in the form of direct conversation but as reactions: if the player gets too close to or nudges an AI character, the player may be shoved, sworn at and eventually aimed at. Other social interactions between AI characters occur in combat or neutral situations; if an enemy is injured on the ground, he will shout for help, express distress, and so on.

neural nets and fuzzy logic

Middleware

Middleware acts as an extension of the engine, providing services that are beyond the engine’s own capabilities. It is not a part of the core engine but can be licensed for various purposes. There is middleware for almost any feature of a game engine. For example, the physics in Skate 3 could behave unrealistically: if you fell off your board you would bounce or fly implausible distances. For their next game the developers might license ‘Havok’, a well-established and respected physics engine, to help fix this problem. Other middleware can assist your game in other ways; Demonware, for example, is networking middleware whose sole purpose is to improve your online features.

Sound

Sound in games is essential because it gives the player noticeable feedback on interactions in the game. Sound can also add realism by providing ambient sounds that make the environment more believable to be a part of. For example, if the scene is set in an army camp you will be able to hear marching, guns being reloaded or fired, chants, the groans of injured soldiers and so on. You could also include soundtracks that bring out different emotions in the player; for example, Dead Space uses music to shift your emotions from calm to scared in a matter of seconds. Usually sounds are made and edited outside of the engine; however, some engines do include their own audio technology.

This is the difference between single-sided and two-sided materials.

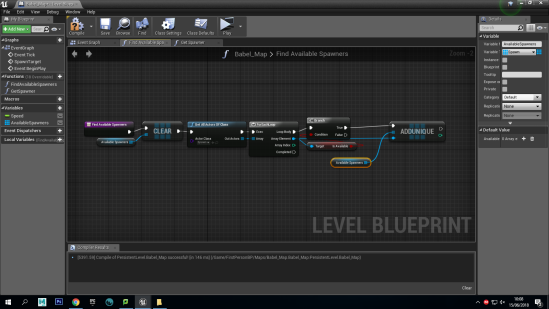

The part in the code that is missing, which would fix this problem, is setting the “isAvailable” variable to un-ticked when the target spawns in the level, making that specific spawner unavailable.

After this I added the following code, which would find available spawners every two seconds, spawn a target and set the target to not available.

This made the final process a lot easier, since I just duplicated a single piece a few times and re-positioned them to create a high tower.

People said, because it was so subtle, it seemed like it disappeared in a way when you were moving around and concentrating on shooting targets.

I added mine in the level because it made the whole project look more professional, even though it did prevent the player from playing the game for 8 seconds, which is like a waiter handing a steak to a customer, but waiting 8 seconds before allowing them to eat it. It also was used to give a brief introduction / backstory to the map so you knew what you had to do as soon as you spawned, instead of waiting and figuring it out on your own.

I added mine in the level because it made the whole project look more professional, even though it did prevent the player from playing the game for 8 seconds, which is like a waiter handing a steak to a customer, but waiting 8 seconds before allowing them to eat it. It also was used to give a brief introduction / backstory to the map so you knew what you had to do as soon as you spawned, instead of waiting and figuring it out on your own. Rasterising does a good job, but is not able to create the same level of detail as raytracing in real time.

Rasterising does a good job, but is not able to create the same level of detail as raytracing in real time. It can create very accurate reflection and refraction, but because it calculates every ray and displays for every pixel of the screen, this means that a lot of data needs to be calculated, for this reason ray tracing is not best suited for real time rendering.

It can create very accurate reflection and refraction, but because it calculates every ray and displays for every pixel of the screen, this means that a lot of data needs to be calculated, for this reason ray tracing is not best suited for real time rendering. This example shows a scene rendered without and with radiosity.

This example shows a scene rendered without and with radiosity.

Shaders can be Vertex or Pixel:

Shaders can be Vertex or Pixel: Example of an image before and after vertex shading. Both are the same model, but the second has a shader applied to it that modifies the position of the existing vertices.

Example of an image before and after vertex shading. Both are the same model, but the second has a shader applied to it that modifies the position of the existing vertices. This makes models look more detailed without the need to have a high polygon count.

This makes models look more detailed without the need to have a high polygon count.

Each model represents the same gun. LOD works by how close the model is to the player / camera, choosing the level of detail to show. In a game, if we are up close to the gun we would see the version “LOD 0”; if the gun was on the other side of the map, we would see “LOD 3”.

Each model represents the same gun. LOD works by how close the model is to the player / camera, choosing the level of detail to show. In a game, if we are up close to the gun we would see the version “LOD 0”; if the gun was on the other side of the map, we would see “LOD 3”.

The process of animating one or more objects moving along a defined three-dimensional path through the scene is known as path animation. The path is called a motion path, and is quite different from a motion trail, which is used to edit animations. Path animations can be created in two ways:

When working with inverse kinematics (IK), you can create an extra control structure known as an IK handle for certain joint chains, such as legs and arms. The purpose of the IK handle is to let you pose and animate an entire joint chain by moving a single manipulator.
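To show what "moving a single manipulator" means in practice, here is a hedged sketch of a two-joint IK solve in 2D using the law of cosines. It is a simplified stand-in, not how any particular animation package implements its IK handle; the function name `two_joint_ik` and the flat 2D setup are assumptions.

```python
import math
from typing import Tuple


def two_joint_ik(target_x: float, target_y: float,
                 upper_len: float, lower_len: float) -> Tuple[float, float]:
    """Return (shoulder, elbow) angles in radians that place the end of a
    two-bone chain, rooted at the origin, on the target point."""
    dist = math.hypot(target_x, target_y)
    # Clamp so an out-of-reach target just stretches the chain towards it.
    dist = max(abs(upper_len - lower_len), min(upper_len + lower_len, dist))
    # Law of cosines gives the bend at the elbow.
    cos_elbow = (dist ** 2 - upper_len ** 2 - lower_len ** 2) / (
        2 * upper_len * lower_len)
    elbow = math.acos(max(-1.0, min(1.0, cos_elbow)))
    # Aim the shoulder at the target, then correct for the bent elbow.
    shoulder = math.atan2(target_y, target_x) - math.atan2(
        lower_len * math.sin(elbow), upper_len + lower_len * math.cos(elbow))
    return shoulder, elbow


# Moving only the target is enough to get both joint angles.
print(two_joint_ik(1.0, 1.0, 1.0, 1.0))
```

The animator supplies only the target position; both joint rotations are worked out from it.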

On the left, the character is not using IK setups. In the middle, IK is used to keep the feet planted on the small colliding objects. On the right, IK is used to make the character’s punch animation stop when it hits the moving block.

Unlike posing with inverse kinematics, forward kinematics (FK) requires you to rotate each joint individually until you reach the desired position. Moving a joint affects that joint and any joints below it in the hierarchy. Therefore, if you want to move a hand to a specific place, you must rotate several arm joints to reach that location.
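The way a rotation carries down the hierarchy can be sketched in 2D as follows. This is an illustrative simplification with assumed names (`forward_kinematics`, a flat list of bone lengths and joint angles), not the internals of any specific tool.

```python
import math
from typing import List, Sequence, Tuple


def forward_kinematics(lengths: Sequence[float],
                       angles: Sequence[float]) -> List[Tuple[float, float]]:
    """Return the 2D position of every joint in a chain rooted at the origin.

    Each angle (radians) is relative to the parent bone, so rotating one
    joint also moves every joint below it in the hierarchy.
    """
    x, y, total_angle = 0.0, 0.0, 0.0
    positions = [(x, y)]
    for length, angle in zip(lengths, angles):
        total_angle += angle  # child inherits the parent's rotation
        x += length * math.cos(total_angle)
        y += length * math.sin(total_angle)
        positions.append((x, y))
    return positions


# Shoulder straight out, elbow bent 90 degrees: the hand ends near (1, 1).
print(forward_kinematics([1.0, 1.0], [0.0, math.pi / 2]))
```

To put the hand somewhere specific with this approach, you have to try different values for each angle yourself, which is exactly the manual work described above.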

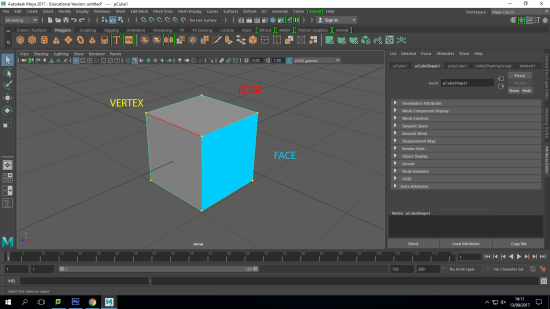

Primitives are the building blocks of 3D: basic geometric forms that you can use as is or modify to your liking. Most software packages build them in for speed and convenience.

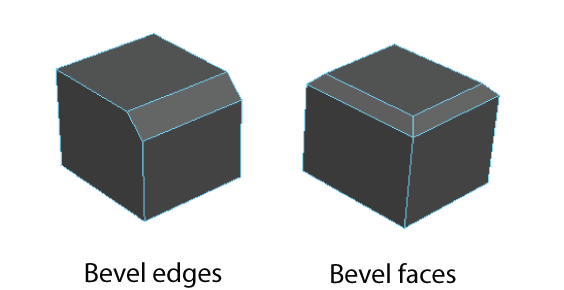

Bevels expand each selected edge into a new face, rounding the edges of a polygon mesh.

The Insert Edge Loop Tool lets you select and then split the polygon faces across either a full or partial edge ring on a mesh. It is useful when you want to add detail across a large area of a mesh or when you want to insert edges along a user-defined path.

These two techniques are usually used together to create accurate and complex 3D models.

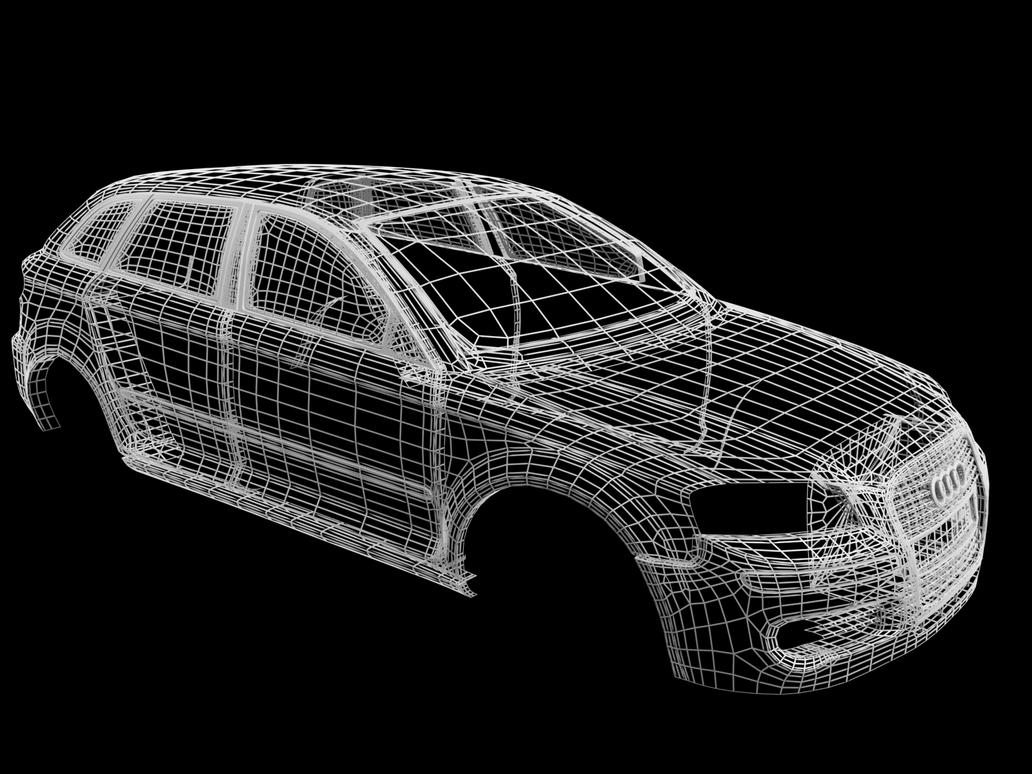

Products are designed in 3D because it gives the designer a better perspective: for example, they can view the product from all angles rather than as just a flat image on paper.

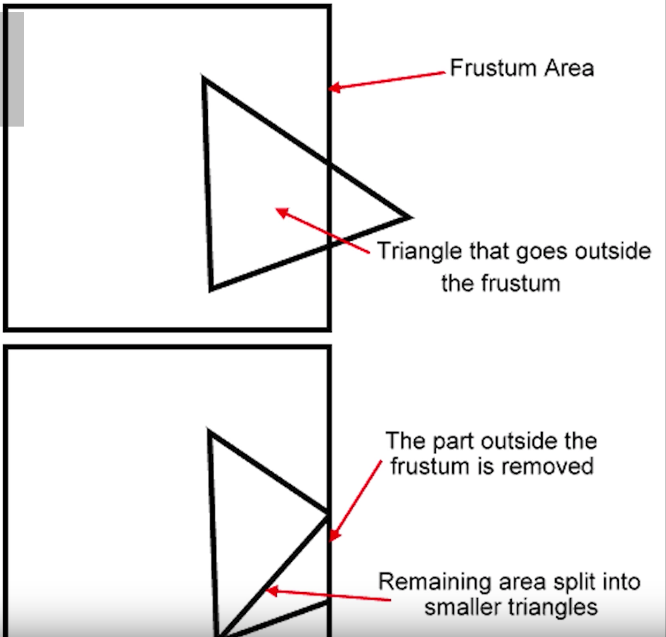

Clipping is where any geometry that lies partly outside the view frustum is clipped and reshaped.

– Fragment (Pixel) Processor – here the geometry is taken and its fragments are shaded to make up the shapes.
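The clipping step described above can be sketched with one pass of the Sutherland–Hodgman polygon clipping approach. This is a deliberately reduced 2D example against a single boundary (everything with x greater than `x_max` is treated as outside the view); a real pipeline clips in 3D against all the planes of the view frustum. The function name `clip_polygon_to_max_x` is an assumption.

```python
from typing import List, Tuple

Point = Tuple[float, float]


def clip_polygon_to_max_x(polygon: List[Point], x_max: float) -> List[Point]:
    """Clip a 2D polygon so that only the part with x <= x_max remains."""
    clipped: List[Point] = []
    for i, current in enumerate(polygon):
        previous = polygon[i - 1]  # index -1 wraps round to the last vertex
        current_inside = current[0] <= x_max
        previous_inside = previous[0] <= x_max
        if current_inside != previous_inside:
            # The edge crosses the boundary: add the crossing point.
            t = (x_max - previous[0]) / (current[0] - previous[0])
            clipped.append(
                (x_max, previous[1] + t * (current[1] - previous[1])))
        if current_inside:
            clipped.append(current)
    return clipped


# A triangle that pokes past x = 1 is reshaped into a four-sided polygon.
print(clip_polygon_to_max_x([(0.0, 0.0), (2.0, 0.0), (0.0, 2.0)], 1.0))
```

The triangle is not thrown away; the part outside the boundary is cut off and new vertices are created along the cut, which is what "clipped and reshaped" refers to.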

This frame from Toy Story took 16 hours to render.

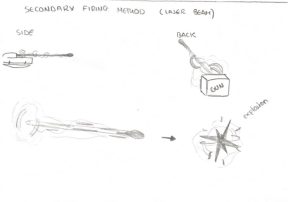

Since I based my gun on Overwatch, a great example of a weapon with a similar look and sound design to mine would be Zarya’s weapon.

The GameCube logo is certainly a cleverly designed logo. Looked at this way, the blue lines form the letter ‘G’ and the space in between forms the letter ‘C’, standing for GameCube.

George Opperman designed the iconic “Fuji” Atari logo back in 1972. It represents a stylised letter ‘A’ to stand for ‘Atari’. He was inspired by Atari’s popular game at the time, Pong. The two side pieces of the Atari symbol represent two opposing video game players, with the center line of the Pong court in the middle.

One of the most well-known and loved game consoles, PlayStation has the sort of logo that sticks in the mind and makes consumers remember it. The original PlayStation logo, which was unveiled in 1994, featured the familiar combined “PS” symbol. Interestingly, the company had no fewer than 20 versions of the logo to choose from. These included three oval shapes colored yellow, red, and blue, as well as several versions built around stylised letters “S” and “P”.

Nintendo’s logo uses “racetrack” typography. It features all the parts that make the Nintendo logo so recognizable.

The insignia of the Assassin Order, though varying slightly over different time periods and countries, held essentially the same shape and style of an eagle’s skull. Each of its variations represented the various sects of the Order. The insignia was also part of the armor of leading Assassin figures in a number of time periods.